Can You Tell Cats Apart from Guacamole?

BLOG: Heidelberg Laureate Forum

For humans, telling cats and guacamole apart is (hopefully) a trivial task. For modern image-focused neural networks, it is also an achievable task. However, as ACM A.M. Turing Award laureate Adi Shamir showed at the 10th Heidelberg Laureate Forum this year, there is a catch: You can trick the networks.

Shamir is mostly celebrated for his work in cryptography but has made contributions to computer science outside of cryptography. Recently, he has increasingly been focusing on adversarial examples in machine learning.

Adversarial attacks are techniques used to manipulate the output of machine learning models, particularly neural networks, by introducing carefully crafted input data. In the case of an image, the end result may look very similar to the original data to an observer, but they feature very slight changes that can cause the model to make incorrect predictions or classifications. Essentially, by changing just a few key pixels in a specific way, the classifier can be completely deceived.

Researchers started figuring out that tiny perturbations can cause severe effects in algorithms in 2013, Shamir notes in a recent paper. This became even more apparent when image neural networks became really powerful.

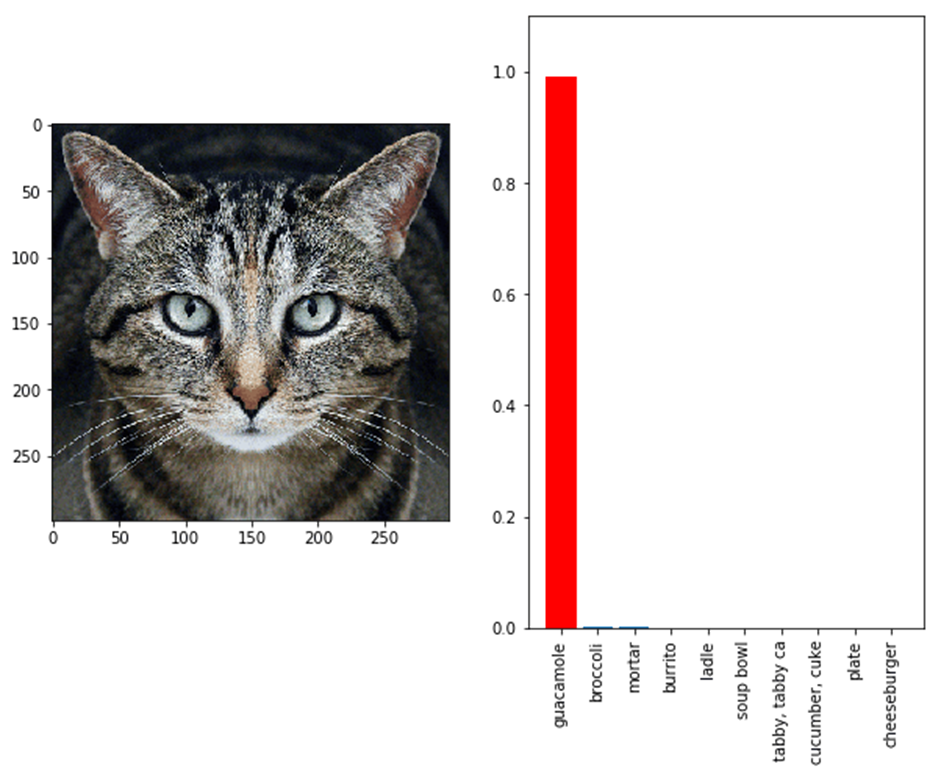

This was brilliantly illustrated in 2017 by a student-run group at MIT who picked the ever-popular cat-guacamole example to show how neural networks can be fooled. This has since become the go-to example, the “hello world” of adversarial attacks.

Adversarial attacks on image neural networks involve introducing small, often imperceptible changes to an image to deceive a neural network into misclassifying it. While the altered image still looks the same to humans, the neural network might see it differently due to the subtle manipulations. These attacks exploit the complex, high-dimensional space in which neural networks operate, finding weaknesses that cause the network to make errors. The goal can be simply to make the network misclassify the image or to misclassify it in a specific, targeted way

Imagine you have a well-trained neural network that can correctly identify a picture of a cat 99% of the time. An adversarial attack aims to subtly and maliciously modify this picture so that the neural network misclassifies it, perhaps thinking it’s a dog, while to the human eye, the image still clearly looks like a cat.

Initially, such attacks needed to know how the neural network functioned, but that is no longer the case. Attacks can work on multiple types of networks trained in different way, and there’s no clear way to prevent such attacks.

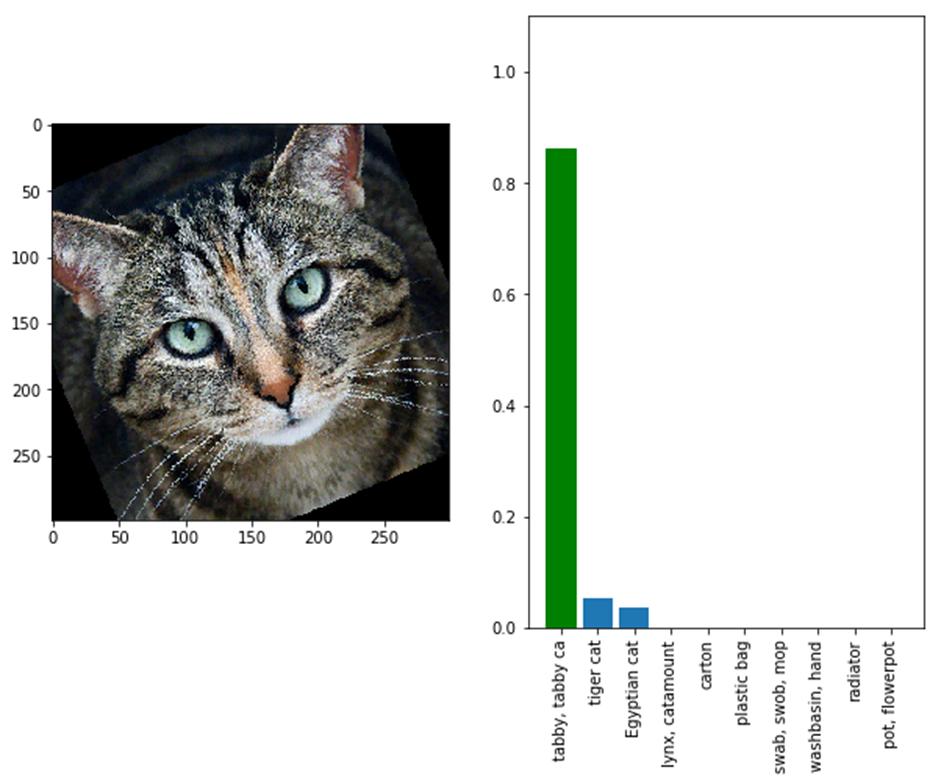

Such adversarial attacks are dangerous, but they are themselves vulnerable. Here is the same image as above, only rotated slightly: it is now correctly identified as a cat (and a tabby at that).

In his HLF lecture, Shamir proposed a framework to understand why this happens and how you can fool networks. Of course, the laureate does not want to actually attack neural networks. He wants to make them safer – and there is a very good reason for that.

Why This Matters So Much

We have been hearing some version of “AI is coming” for a decade or so. But now AI is here, in particular generative AI. The number of AI science papers has skyrocketed; the ways in which companies are using AI is surging; and we are already seeing it in our day-to-day life. You only need to look at ChatGPT to see how big of an impact a single example can have.

Nowadays, neural networks can also create strikingly complex images with ease. Research in this field has matured to the point where several companies offer such services to end users at a relatively low cost – a sign of a matured technology.

However, these networks are also fragile, Shamir notes.

It is not hard to understand why adversarial attacks can cause major problems. These attacks could be used maliciously to deceive AI systems in critical applications like autonomous vehicles, facial recognition, and medical imaging. Imagine a self-driving car that uses cameras to recognize traffic signs. An adversarial attack could subtly alter the appearance of a stop sign so that the car’s AI interprets it as a yield sign. This could lead to dangerous situations on the road. Adversarial attacks could be used to fool security systems: For example, by wearing a specially crafted pair of glasses or using a modified photo, an intruder might be incorrectly identified as an authorized user. It does not have to be a visual attack, either. With an adversarial voice attack, you could fool personal assistants like Siri or Alexa into executing commands without the user’s knowledge.

But perhaps nowhere is this as dangerous as in facial recognition.

Scarlett Johansson Is the New Guacamole

By the end of 2021, an estimated one billion surveillance cameras were in operation globally, over half of them in China. Governments and companies are increasingly looking at deploying facial recognition using these cameras. This is in itself a pretty questionable decision, but let us focus on the technical aspects.

Over the past decade, there has been an “amazing improvement in the accuracy of facial recognition systems,” says Shamir. This accuracy is now at over 99%, and given the large number of cameras it is “the only way to deal with this massive data stream,” the laureate adds.

As with the cat-guacamole example, Shamir showed that you can fool algorithms into believing one person is another. He mentions the example of Morgan Freeman and Scarlett Johansson. Algorithms believe one is the other. There was no difference for anyone else in the world, but if you want to make an attack on two people in particular, it can be done.

Shamir even detailed another type of attack he has developed. Instead of changing pixels, he mathematically modified a few weights in the neural network. This is a new attack type that he called “weight surgery.” It is extremely difficult to predict what will be the effect of such changes on the performance of the neural network, but it shows once again how vulnerable such systems can be.

“Since we don’t understand the role of the weights in Deep Neural Networks [multi-layered models often used for image generation], hackers can easily embed hard to detect trapdoors into open source models,” Shamir explained in the lecture.

So then, where does this leave us?

No Simple Solution

Being aware of the problem is an important first step. It is easy to get swept away in the hype and disregard some of the issues that can stem from it. Understanding exactly what the problem is would be the next step – this is what Shamir (and many other researchers) are working on. There is important progress being made on this front, the laureate mentions.

However, overcoming the problem is a different challenge. Simply put, we are not able to do this at the moment. There are currently no effective ways to recognize adversarial examples and their effect, the laureate notes.

So for now, the best thing to do is simply tread carefully when deploying such networks.

“Deep Neural Networks are extremely vulnerable to many types of attacks, and we should take great care in deploying them in a safe way,” Shamir concludes.

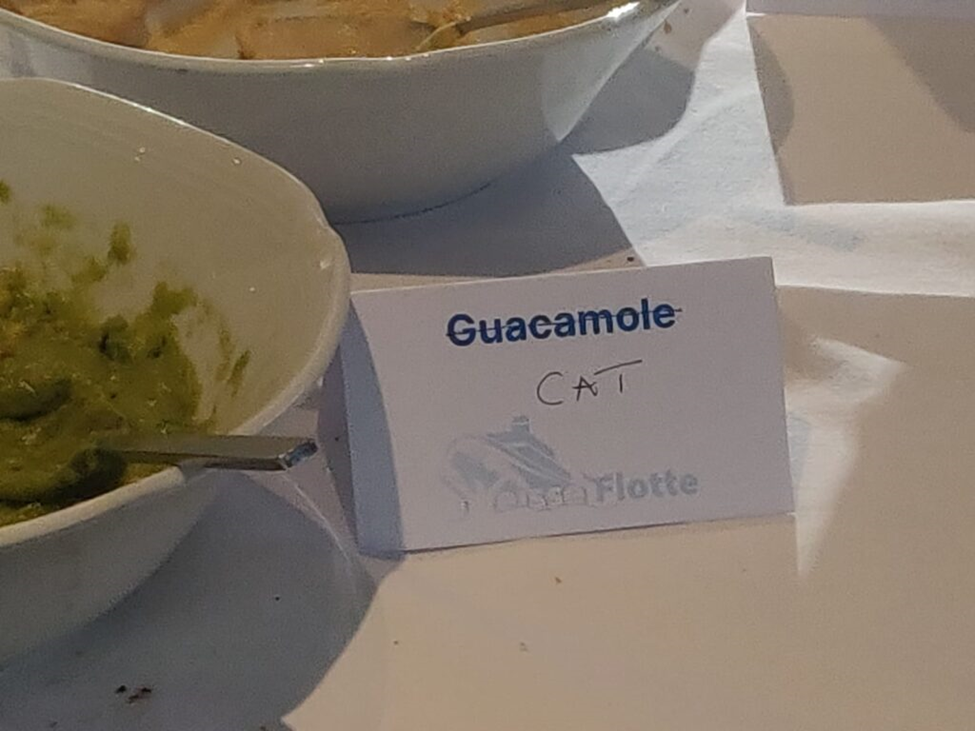

This was not lost on the young researchers in Heidelberg. During the boat trip, someone took the time to reclassify what was served as a dip. So, would you like some cat to go with the nachos?

Yesterday, I published a guest article on the subject of “THEORY AND PRACTICE OF ARTIFICIAL INTELLIGENCE“, which, among other things The term “artificial intelligence” is misleading and, on closer inspection, incorrect, because even more complex and nested algorithms based on (information) mathematical links demonstrably do not generate general methodological solutions… Interdisciplinary foresight is sometimes a huge, unsolvable problem for “AI” with fatal consequences.

Example: A computer scientist who offers courses in Python and Pandas was banned for life by Meta/Facebook. Python is a – quite popular and well-known – programming language. Pandas is a Python programme library for analysing and displaying statistical data. The assumption that his adverts were about living animals was/are based on “AI”, not human judgement. When he lodged a complaint against this, his complaint was in turn answered by “AI” – and the result was just as “meaningless”…