Bits and Neurons: Is biological computation on its way?

BLOG: Heidelberg Laureate Forum

The field of artificial intelligence (AI) has grown tremendously in recent years. It’s already being deployed almost routinely in some industries, and it’s probably safe to say we’re seeing an AI revolution.

But artificial intelligence has remained, well, artificial. Can AI — or computers — actually be organic (as in, biological)?

Researchers have tried poking at the problem from different angles, from simply learning from biological processes to using biological structures as software (or even hardware) to inserting chips straight into the brain. All approaches come with their own advantages and challenges.

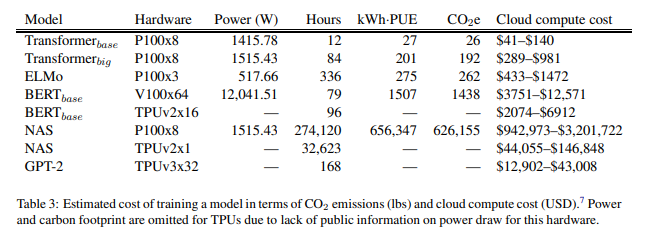

For starters, one reason to look for biological alternatives is energy. Today’s AI consumes a great deal of it. In a recent study, researchers at the University of Massachusetts, Amherst, found that training an AI model draws thousands of watts, whereas natural intelligence (the human brain) runs on just 20 watts. When you also factor in the energy cost of running the computers themselves (electricity that often does not come from renewable sources), the picture becomes even more problematic.

Here’s a breakdown of the costs and energy they calculated for various AI models:

“As a result, these models are costly to train and develop, both financially, due to the cost of hardware and electricity or cloud compute time, and environmentally, due to the carbon footprint required to fuel modern tensor processing hardware,” the researchers write.

Of course, you rarely train one model on one dataset and stop there. It often takes hundreds of runs, which further ramps up the energy expense. There is still some room for improvement in terms of AI efficiency, but the more complex the models become, the more energy they will likely use.

It should be said that in some fields, AI can already detect patterns far beyond the current ability of the human brain, and it can be developed further for years to come. But given all the energy this consumes, the biological brain still seems to have a big edge in terms of efficiency.

After all, an AI is still only suitable for a very narrow task, whereas humans and other animals navigate myriad situations.

Organic software, organic hardware

It’s not just the software part — using biology as hardware has also picked up steam.

Various approaches are being researched. So-called “wetware computers”, which are essentially computers made of organic matter, are still largely conceptual, but some prototypes have shown promise.

The birth of the field took place around 1999, with the work of William Ditto at the Georgia Institute of Technology. He constructed a simple neurocomputer capable of addition using leech neurons, which were chosen because of their large size.

It’s not easy to manipulate the currents inside neurons and harness them for calculation, but it worked, although the end result was a proof of concept more than anything else. For all their power and energy efficiency, the signals within neurons often appeared chaotic and hard to control. Ditto said he believes that with more neurons, the chaotic signals would self-organize into a structured pattern (as in living creatures), but this is a hypothesis rather than a proven fact.

Nevertheless, Ditto’s research effectively pioneered a new field, bringing it from the realm of sci-fi into reality. However, although our technological ability has improved dramatically since 1999, our understanding of the underlying biology has progressed more slowly. Daniel Dennett, a professor at Tufts University in Massachusetts, discussed the importance of distinguishing between the hardware and software components of a normal computer and what is going on in an organic computer. “The mind is not a program running on the hardware of the brain,” Dennett famously wrote, arguing that some level of progress in cognitive science is necessary to truly make progress with this approach.

Meanwhile, approaches using other biological components have been making steady progress. A team from UC Davis and Harvard demonstrated a DNA computer that could run 21 different programs such as sorting, copying, and recognizing palindromes.

It wasn’t the first DNA computer. In 2002, J. Macdonald, D. Stefanovic and M. Stojanovic created a DNA computer capable of playing tic-tac-toe against a human player, and even before that, in 1994, researchers working in Germany designed a DNA computer that solved the Chess knight’s tour problem.

Increasingly, research is hinting at low-energy, customizable DNA computers, which offer an organic, brain-like learning system. MIT researchers have also pioneered a cell-based computer that can react to stimuli.

“You can build very complex computing systems if you integrate the element of memory together with computation,” said Timothy Lu, an associate professor of electrical engineering and computer science and of biological engineering at MIT’s Research Laboratory of Electronics, at the time.

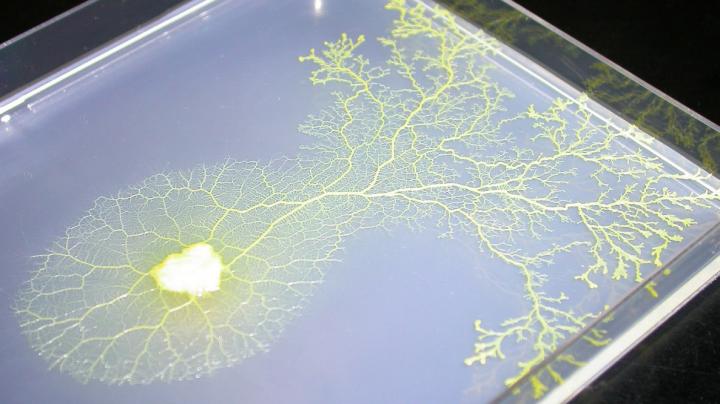

Meanwhile, a group of researchers in Japan have taken a different path: they developed an analog, amoeba-based computer that offers efficient solutions to something called ‘The Traveling Salesman Problem’ — which is very difficult for regular computers.

The Traveling Salesman Problem sounds simple but is deceptively hard. You’re visiting a number of different cities to sell your products (each city just once) and must then return home. Given all the distances, what’s the shortest possible route you can take?

If you try to solve it by brute force (checking every possible route), it quickly becomes intractable, because the number of routes grows factorially with the number of cities (although some algorithms and heuristics do exist). Amoebas placed in a system with 64 ‘cities’ (areas with nutrients that the amoeba wanted to reach) were able to solve the problem remarkably fast. They were still a bit slower than the very fastest machines, but they were close. And if our best, energy-intensive machines can barely beat a simple amoeba, maybe it’s an avenue worth researching more.
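To make the cost of trying every route concrete, here is a minimal Python sketch of an exhaustive search. It is only an illustration and not the method used in the amoeba experiment. The city coordinates and the function names (`tour_length`, `brute_force_tsp`, `n_tours`) are made up for this example.

```python
from itertools import permutations
from math import dist, factorial


def tour_length(order, cities):
    """Length of the closed tour that visits the cities in the given order."""
    # Pair each stop with the next one; the last stop is paired with the first.
    return sum(dist(cities[a], cities[b])
               for a, b in zip(order, order[1:] + order[:1]))


def brute_force_tsp(cities):
    """Check every ordering that starts at city 0; return (best_order, length)."""
    candidates = ((0,) + p for p in permutations(range(1, len(cities))))
    best = min(candidates, key=lambda order: tour_length(order, cities))
    return best, tour_length(best, cities)


def n_tours(n):
    """Number of distinct round trips through n >= 3 cities: (n - 1)! / 2."""
    return factorial(n - 1) // 2


# Hypothetical example: four cities on the corners of a 3 x 4 rectangle.
cities = [(0, 0), (3, 0), (3, 4), (0, 4)]
order, length = brute_force_tsp(cities)
print(order, length)   # (0, 1, 2, 3) 14.0, i.e. walk around the rectangle
print(n_tours(4))      # 3 distinct tours for four cities
print(n_tours(64))     # an integer with more than 80 digits
```

Four cities give only three distinct tours, but 64 cities give (63!)/2 of them, which is why practical solvers rely on heuristics instead of enumeration.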

The other way around

Using biological structures for computation is one approach. Another is merging biology with chips, using the latter to augment the former. In this field too, things have advanced at a quick pace.

For instance, in 2020, Elon Musk made headlines (as he so often does), unveiling a pig called Gertrude that had a coin-sized chip implanted into her brain.

“It’s kind of like a Fitbit in your skull with tiny wires,” the billionaire entrepreneur said on a webcast presenting the achievement. Earlier this year, his start-up presented something even more remarkable: a monkey with a brain chip that allowed it to play computer games remotely.

The monkey first played computer games normally, with a joystick. The chip recorded the brain activity when an action was performed, and it wasn’t long before the monkey was able to play the game directly with brain signals and no actual touching.

Musk is applying for human trials next year, and several other researchers are also looking at different ways injectable chips may be used in humans.

It’s still early days, but more and more, we’re seeing ways in which organic matter and computers interact directly. There are different approaches, all with their advantages and drawbacks. We’re not looking at organic robots or AI just yet, but with the way things are progressing, those don’t seem nearly as far-fetched as they did a mere decade ago.

Intertwining the biological with the digital offers distinct advantages, from energy efficiency to optimized computation, but at the same time, this is not an easy challenge — and not a pursuit free of concern. When the natural and artificial worlds merge (as in the case of brain chipping, for example) the waters can quickly become murky.

The implications and ethics of the field should be considered carefully. Too often, these implications come as little more than an afterthought. The iconic quote from Jurassic Park pops to mind — and it’s perhaps something the Elon Musks of the world should also think about:

Ian Malcolm [Jeff Goldblum]: Yeah, yeah, but your scientists were so preoccupied with whether or not they could, that they didn’t stop to think if they should.

The most promising approach to AI computing is not really mentioned in this article, namely neuromorphic computing.

Neuromorphic computing opens up the opportunity to build artificial neural networks in hardware, as opposed to the current approach of simulating artificial neural networks in software.

Currently, neuromorphic computing is not the best approach because a general-purpose computer is better suited to experiment with entirely new architectures, and new AI architectures appear every two or three years as we are in the midst of an AI revolution. But once the best architecture is found and the field is settled, that architecture can be materialized in optimal hardware, and that hardware will be neuromorphic.

Why 1 kilogram of brain can outperform a 100-ton supercomputer.

Today’s silicon chips have structures 1000 times smaller than a neuron, and they operate 1 million times faster than neurons. And in fact, a supercomputer can multiply two matrices 1 billion times faster than a human.

This all changes when we move to higher cognitive functions or even simple perception and recognition. Here, the human brain outperforms the computer in many cases. And why? Because the brain is an evolutionary product in which software and hardware are tightly interwoven.

Current, mainly software-oriented attempts to emulate the brain are relatively recent – recent compared to the millions of years nature has spent optimizing the brain.

When the time comes that engineers have realized what the essence of human brain activity is, it will only take a small chip to emulate the brain. It is the lack of knowledge that is responsible for the poor performance of today’s computers in emulating the brain.

This will probably take much longer than most curious scientists and laypeople would expect or hope. I rather share Roger Penrose’s opinion that there are processes in the brain that are far too complex to be described with our current knowledge of physics. Roger Penrose is a theoretical physicist who is very engaged in neuroscience and is in contact with leading neuroscientists. He speculates that it will probably take generations of scientists to get there. Finding a unifying theoretical model of gravitation and quantum theory is just a first step, opening up the possibility of a better understanding of the powerfully orchestrated physical processes in a living brain.

Whether …it will only take a small chip to emulate the brain… can be better judged after that.

@ralph: Time will tell

Brain Computer Interfaces as the Last and Ultimate User Interface.

Computer interfaces evolved from punched cards and punched tape as input and line printer text as output to command line interfaces, then to graphical user interfaces, and now to touch screens and voice-based dialog systems.

The next and final interface could be thoughts alone, and the output could be voices and images superimposed on what is perceived. It would be almost like the hallucinations of the mentally ill, only now as messages: the output of a computer that becomes my second self.

Brain-computer interfaces are the most direct way to communicate with a computer, and there is a belief that a computer reading my brain can instantly respond to my thoughts and do what I intend. But this is not really true, because my thoughts and ideas are more often just thoughts and ideas, not intended to be executed immediately. The Mind Pong experiment mentioned above illustrates this. At first the monkey moves a joystick that is not connected to the computer, and moving it means that the monkey really intends to do something, not just think about it. The second part of Mind Pong then shows that playing with thoughts alone is possible: the monkey only thinks and does not move its hands to play Pong. But in this case, the computer knows that the monkey intends to play, because it deliberately goes to the playing station.

If my thoughts are constantly monitored by a Brain Computer Interface, the computer has much more difficulty recognizing what is just a dream, an imagination, and what I really intend to do.

For healthy people, a Brain Computer Interface could be the best way to tell a computer what is on my mind and what I am feeling. This could improve my life to an unprecedented degree, but it could also make my life hell if used as torture.

1) In near-death experiences (NDEs) we can consciously perceive, step by step, how the brain processes a single stimulus or thought.

(A Google search for [Kinseher NDERF denken_nte] turns up a free PDF, in German.)

But this DIRECT ACCESS to the working brain has been completely IGNORED by science up to now. This is one reason why scientists don’t understand how the working brain operates. **)

2) Tiny electrodes in the brain are very soon destroyed by the brain’s biological fluid. Therefore, monkeys with electrodes on the brain’s surface may seem like nice experiments for a short time, but in reality this is only cruelty to animals (Tierquälerei).

In addition, electrodes placed on the surface of the brain will move. This means that the equipment needs to be recalibrated constantly.

3) Thoughts have no permanent duration. This means that a procedure working continuously over a long period is not possible!

Re **): some examples of processes that we can perceive in NDEs:

A) We can describe THINKING as a very simple pattern-matching activity, governed by three simple rules (page 4 of my PDF).

B) Experiences are STACKED UP in the brain in hierarchical order, which means that no time-coding is necessary. This reduces the amount of data needed to store and recall memories, and less data allows faster processing.

C) An important detail for increasing the speed of neuronal processing is PRIMING: priming allows us to react one third faster than without it (reacting to a stimulus takes 200 milliseconds with priming, but 300 milliseconds without).

A), B) and C) are only three examples of what could be found if scientists studied NDEs.

@Richard (citation): Tiny electrodes in the brain will be destroyed by the brain´s biological liquid very soon. … Additional: Electrodes which are put to the surface of the brain – will move.

Yes: today’s electronics are incompatible with wetware.

That’s the reason I wrote in a much earlier comment (some years ago) that the ultimate brain-computer interface will come in the form of a genetically modified brain that is able to communicate by optics or radio waves.

@Holzherr

1) In NDEs we can consciously perceive how the brain processes a single stimulus (contents, structures).

To ignore this direct access to our brain is ‘science’ of very poor quality.

2) A book by Julia Shaw describes very well how badly our brain works.

AI researchers who want to create AI products that are as ‘good’ as our brain should read this book:

‘The Memory Illusion. Remembering, Forgetting and the Science of False Memory’

‘Das trügerische Gedächtnis – Wie unser Gehirn Erinnerungen fälscht’

In other words: AI research that aims to create products as ‘good’ as our brain will produce rubbish, and that is a waste of money.

3A) I do not want to discuss fairy tales and/or similar nonsense: genetically modified brains are still brains, with the poor quality of brains.

As long as we do not understand the working procedures of our actual brain, it makes no sense to create modified brains.

Serious researchers should first try to understand our actual brain before thinking about modifications.

3B) Communication via optical systems or radio waves makes no sense, because a THOUGHT has no duration or permanence.

@KRichard (quote): I do not want to discuss fairy tales and/or similar nonsense: Genetically modified brains are still brains, with the poor quality of brains.

Answer: But gene therapy of parts of the brain to restore lost functions is already a reality.

Example: The retina is an outgrowth of the brain, and just a few weeks ago, gene therapy partially restored the vision of a patient blinded by retinitis pigmentosa, by inserting into his retina a gene that expresses a light-sensitive protein found in algae. The patient still needs special glasses to translate natural light into the frequencies to which the algal protein is most sensitive, but he can see again, at least partially.

(See the article Gene from algae helped a blind man regain some of his vision)

As for the (quote) bad quality of brains, there is one overarching truth: any recognition, even that of a sophisticated artificial intelligence, is fallible; it is inherently a construct that can go wrong. People subconsciously know this and make jokes about it.

@Holzherr

A genetically modified receptor is not a modified brain.

A receptor is a sensory nerve ending that transforms specific stimuli into nerve impulses.

@KRichard: optogenetically modified neurons used in brain research are a first step to a genetically modified brain.

Here are some excerpts from the article Optogenetics: Shedding light on the brain’s secrets

Conclusion: Optogenetically modified neurons are already used in brain research and clinical applications will follow, as can be seen in the article The potential of optogenetic cochlear implants

@KRichard: The topic of optogenetics was covered on Spektrum.de early on and several times. Links to the most important articles can be found under Optogenetik – Spektrum der Wissenschaft

Is BERT a power hog?

Quote from the article above: In terms of CO2 equivalents, training BERT emits about as much as a round-trip flight between New York and California.

My contention: BERT is the opposite of an electricity guzzler.

My story supporting my claim:

BERT is a pre-trained language model that can be fine-tuned for a variety of purposes, such as answering student questions about legal or medical issues or artificial intelligence techniques.

BERT is already being used in several interactive online training courses, where it can answer student questions and collect student problems that can then be further addressed by human instructors.

For a given application domain, BERT requires only pretraining and fine-tuning. Fine-tuning runs are quite economical in their use of computing resources.

Conclusion: The CO2 emissions of a BERT pretraining and fine-tuning run are equivalent to a round trip from New York to California, but the model can serve thousands of students, so the CO2 emissions per student are low.

Compare this to a new finance app written by a former coworker of mine. He has traveled to South Africa several times to present his new app. I doubt his finance app is of the same scale and importance as BERT.

@Holzherr

In 1964, Prof. José Delgado provoked a bull into charging and then stopped the charge by remote control.

[Source: Reto Schneider: Das Buch der verrückten Experimente]

Deep brain stimulation via implanted electrodes is useful for people who suffer from Parkinson’s disease and/or epilepsy.

This is very nice, but:

The topic of this blog is the question of whether biological computation is becoming reality.

My remarks described big obstacles to achieving this aim: as long as scientists neglect to study how the brain works, they will never understand it.

@KRichard (Quote): The topic of this blog is the question if biological computation is becoming reality.

Answer: Yes, there will be Brain Computer Interfaces, and yes, the interface must be more biocompatible than today’s wires, but no, most future software will run on silicon, not on cells, because silicon is superior to biological wetware.

Supplement: Quote:

Answer: Computers can actually be organic, and there is an overarching example of this: the human brain.

@Holzherr

You don’t understand the real problem:

Up to now scientists have not understood how the brain works, and modifying the brain without understanding its working procedures is bungling.

In near-death experiences (NDEs) we can consciously perceive, step by step, how the brain processes a single stimulus or thought.

Ignoring this direct access to the working brain is one reason why science has big problems understanding it. To ignore this wonderful access to our brain’s working strategies completely is very embarrassing and stupid.

The structures and contents of NDEs have been well known since 1975, but science has still not analysed them.

Finding, identifying and analysing identical patterns and structures is one of the best methods in science for understanding problems and creating new ideas and theories.

@KRichard (quote): Up to now scientists don´t understand how the brain is working, to modify the brain without understanding it´s working procedures – is bungling.

Today’s researchers and engineers do not want to modify the brain to enable something totally new. They only want to modify it so that it can communicate better and more directly with the environment and with electronics.

Even artificial intelligence currently works differently from the brain. Today’s AI applications do only one thing. They don’t know what they are doing; they have no self, no curiosity of their own, no autonomy. An AI application that has learned to recognize some objects can recognize only those objects and cannot even notice that a new object is not covered by the training set. In other words: the openness of a natural being is missing from current AI programs.

Open-world machine learning has not yet materialized, but it will, perhaps in 20 years.

It would be a good idea to read the Wikipedia article [José Manuel Rodriguez Delgado] and several of the articles linked from it.

From these texts we can learn a lot about the ideas behind electrical brain stimulation.

There is no doubt about the uniqueness of each person’s individual brain structure and contents. This is a big problem, because it makes it impossible to develop standardized equipment for brain stimulation.

Why does AI have such a hard time?

The answer lies in the reductionist approach. Life cannot be reduced to elements, only to principles. And what is the principle of life? Self-organization. And the core of self-organization is growth, or more precisely growth followed by reduction. In other words, what makes up every metabolism. And what is growth? It is the agglomeration of compatible things (not identical things, which would not produce growth). We find this process in thinking, as the association of what is perceived. For example, we link a green plant with the concept ‘tree’ and with an evaluation. If we perceive trees that have other properties, patterns overlap and form a virtual (not a technical) hologram. The reduction of otherwise sprawling overlaps takes place through abstraction, which the Swiss psychologist Jean Piaget called accommodation. In this way 1+1+1 becomes 3×1 and then 1³. This is how complex thinking is reduced. Association is an active process of constant ‘trying’. Piaget called it development with ‘surpassings’ (Übersteigungen). It is the attempt to extend previous experience: either it succeeds or it is an error. Learning proceeds according to this trial-and-error principle (school learning, that is, supervised learning, shows the right paths and prevents errors). Out of many virtual holograms, an overarching hologram is assembled (ontogenetically), which establishes itself as the controlling instance (psychologically: the ego). Following Tononi, this is where the maximum integrated information is found (and where consciousness is produced).

In humans, evaluation takes place through a comparison with norms (Freud: superego) and with vital status (the autonomic nervous system). Evaluation is what we call emotion.

The trial-and-error principle resembles the principle of mutation and selection, but it is fundamentally different. The latter cannot adequately explain the extremely high adaptation of life to its environment by random endogenous mutation alone. How, for instance, would chance know that it should generate color mutations so that a beetle in a green habitat turns green? And so applying this principle does not lead to success in AI either (success = AI converges with natural intelligence, NI).

@Wolfgang Wegmann (quote): Why does AI have such a hard time?

My answer: because AI is artificial intelligence and not artificial life. The attempt to create a bare (artificial) intelligence is similar to the attempt to reduce a human being to their brain. That is why AI lacks autonomy and a grounding in life.

@Hozherr: I think embodiment is the least of AI’s problems. Humanlike robots would not be made of flesh and blood anyway. Emotions and their stabilization over time, that is, feelings, are nothing other than evaluations of the state of the body (on the ‘opposite’ side, norms are evaluated). And that state can be simulated. The decisive question is how thinking actively ‘grows’ into spaces of possibility and thereby opens up reality for itself.

@Wolfgang Stegenann (quote): I think embodiment is the least of AI’s problems.

Agreed: the problem is not the missing embodiment but the missing orientation toward autonomy and thus toward a goal such as “survival”. Unlike AI programs, organisms must not only be able to handle a particular task; they must be robust enough to survive.

But today’s AI programs are not robust. This shows, for example, in the fact that an AI classification program trained to recognize 100 different animals is afterwards unable to recognize that a car is not an animal. It therefore cannot deal with objects that lie outside the training set, the so-called out-of-distribution problem. AI researchers have recognized this problem but have not yet solved it satisfactorily, because their programs have so far not really been confronted with reality. On the Google AI Blog, under the title Improving Out-of-Distribution Detection in Machine Learning Models, one reads:

In other words: a program that can recognize only the bacterial species it has learned, but cannot recognize that a new, as yet unnamed bacterium may be appearing in the data, is problematic in real-world use. Yet today’s classification systems still have this problem, along with other weaknesses in robustness.
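A small Python sketch may help show why a closed-set classifier cannot say “none of the above”. Its softmax output always sums to 1 over the known classes, so some known label always wins. The labels and logit values below are invented for illustration. The confidence threshold is a common simple baseline (rejecting inputs whose top softmax probability is low), not the method from the Google AI Blog post mentioned above.

```python
from math import exp


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1 over the known classes."""
    m = max(logits)                      # subtract the max for numerical stability
    exps = [exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, labels, threshold=None):
    """Return the most probable known label, or "unknown" if a threshold is
    given and the top probability falls below it."""
    probs = softmax(logits)
    best = max(range(len(labels)), key=probs.__getitem__)
    if threshold is not None and probs[best] < threshold:
        return "unknown"
    return labels[best]


labels = ["cat", "dog", "horse"]     # hypothetical training classes
cat_logits = [6.0, 0.5, 0.2]         # made-up scores for a clear cat photo
car_logits = [0.2, 0.1, 0.3]         # made-up scores for a photo of a car

print(classify(car_logits, labels))                  # horse: forced to pick an animal
print(classify(car_logits, labels, threshold=0.9))   # unknown
print(classify(cat_logits, labels, threshold=0.9))   # cat
```

The threshold only helps when the model happens to be unsure. Real networks are often highly confident on inputs far from their training data, which is one reason the problem remains open.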

Is biological computation on its way? Yes, it is on its way, for biological applications.

Computational DNA and computing cells are used for medical applications such as targeted drug delivery and precision medicine, not for high-performance computing.

Memristor-based neuromorphic computing may be able to implement GPT-3

GPT-3 has 175 billion machine learning parameters. This corresponds to 175 billion synaptic weights or, in conventional hardware, to at least 175 billion memory cells, that is, 175 gigabytes at one byte per parameter.

Only a neuromorphic platform that uses memristors to realise synaptic weights may be able to support such a large memory requirement on a single chip.
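The memory estimate above can be checked with a few lines of Python. The sketch assumes one stored value per parameter and decimal gigabytes (10^9 bytes). The precisions listed are illustrative assumptions, and the 175-gigabyte figure corresponds to one byte per parameter.

```python
PARAMS = 175_000_000_000  # GPT-3 parameter count quoted in the comment above


def weight_memory_gb(n_params, bytes_per_param):
    """Memory needed just to store the weights, in decimal gigabytes."""
    return n_params * bytes_per_param / 1e9


# Illustrative precisions (assumptions, not taken from the comment).
for name, nbytes in [("8-bit", 1), ("16-bit", 2), ("32-bit", 4)]:
    print(f"{name}: {weight_memory_gb(PARAMS, nbytes):.0f} GB")
# 8-bit: 175 GB
# 16-bit: 350 GB
# 32-bit: 700 GB
```

So 175 gigabytes is a lower bound, and storing the weights at higher precision multiplies it accordingly.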